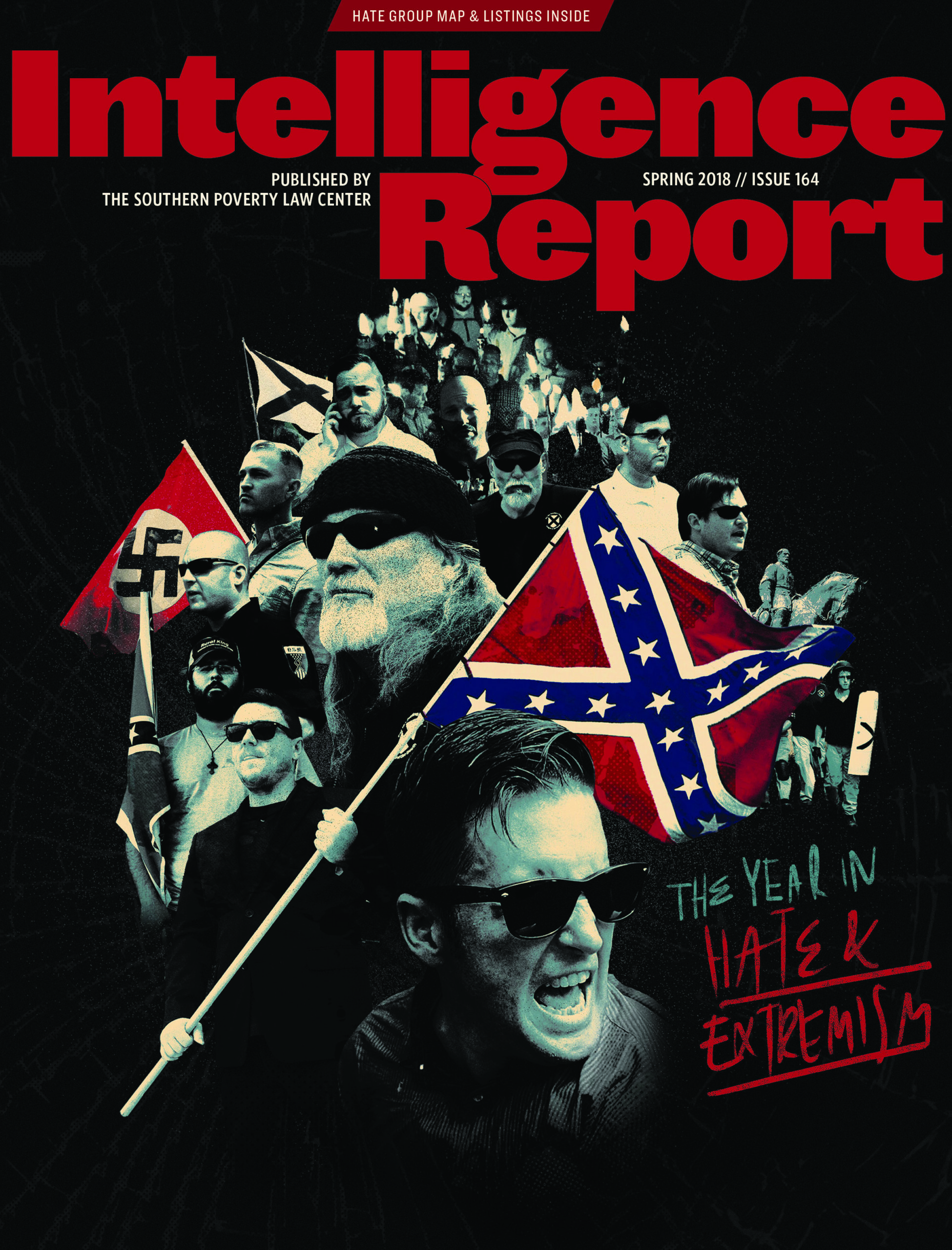

It took blood in the streets for the tech industry to finally face its domestic extremism problem. Will this newfound commitment last?

One way to assess the tech industry’s evolution in its approach to hate and extremism is to examine the Twitter account of Richard Spencer, the white nationalist leader of the racist “alt-right” movement who advocates for “peaceful ethnic cleansing.”

In the beginning of 2016, Spencer had a mere 6,000 followers on the social media platform. Right before the presidential election, Spencer’s Twitter followers had grown to 19,000. After his infamous “Hail Trump” speech celebrating the president-elect — where audience members were captured on video giving Nazi salutes — his follower count burgeoned to nearly 30,000. Today, that number has more than doubled. Spencer now reaches an audience of 80,000 followers.

This past November, however, Spencer’s account lost the coveted blue check mark, which according to Twitter is a way to verify the accounts of public figures like celebrities, politicians and members of the media. But the blue check marks also convey (and help build) authority and influence — something Spencer clearly craves.

Spencer lost his status at the same time Twitter announced major new rules that, among other details, prohibit behavior that “incites fear about a protected group” and ban “hate symbols.” Accounts that violate these new rules would be suspended, the company announced, and on December 18 the #TwitterPurge arrived. The network of alt-right accounts that proliferated in step with Spencer watched as Jared Taylor, the Traditionalist Worker Party, Bradley “Hunter Wallace” Griffin and a handful of other accounts were booted from the platform.

As this issue went to print, however, Spencer and a large number of other prominent white nationalists survived the ban’s first wave. What Twitter is trying to accomplish is not quite clear.

Civil rights organizations and members of communities who have borne the brunt of organized harassment campaigns and a steady river of online abuse have long been sounding the alarm, lobbying Silicon Valley to rein in hate groups exploiting the openness of their platforms. Some companies, like Apple and Amazon, were quick to embrace and enforce community guidelines that dealt with extremists.

But the majority of companies, including the “Big Three” of Facebook, Google and particularly Twitter, clung to Silicon Valley’s foundational libertarian ethos, which placed the full burden of policing their communities on users. For some companies, this approach neatly dovetailed with Wall Street valuations that hinged on the growth of their user base, a force that also encouraged companies to lower wages and rely on contractors for important work like content moderation.

Despite all this, 2017 brought the beginning of important changes in policy. What happened?

Unite the Right

Charlottesville broke the dam.

In the days following the violence of August 12, the entire tech industry — from social media companies to domain registrars to payment platforms — moved to ban the accounts of prominent participants and organizers of the “Unite the Right” rally.

In a post at his website Counter-Currents, white nationalist Greg Johnson called the moment a “catastrophe.” His operation, along with many other critical institutions within the white nationalist movement, felt the crackdown immediately, especially when it came to payment platforms like PayPal.

“These moves by PayPal and Facebook are obviously part of a coordinated Leftist purge of white advocacy groups in the aftermath of Unite the Right,” Johnson wrote.

“The state and the rabble are conspiring to physically assault us in the streets, while corporations are conspiring to censor and deplatform us. We have to keep fighting. They can whack us down in one place, but we’ll pop up in five others. It is discouraging and exhausting, but it is worth it, since we are doing nothing less than saving the world.”

In reality, the platforms were finally acting on longstanding terms of service and acceptable use policies they had previously rarely enforced. It took blood in the streets for companies to act.

In a stunning sequence of events, The Daily Stormer, the most influential hate site on the web, was also driven off the internet for its support of the rally. First GoDaddy dropped its domain and then Google seized it. Matthew Prince, the CEO of CloudFlare, canceled their services that replicated the neo-Nazi site in data centers around the world. “I woke up this morning in a bad mood and decided to kick them off the Internet,” Prince wrote. (CloudFlare still protects numerous white supremacist websites.)

Stormfront, whose users have included a number of violent white supremacists, also had its domain seized and went dark for the longest period of time since it first came online in 1996. In late September, Stormfront returned to the web.

For a lot of hate groups, the writing has been on the wall. One of the scheduled headliners of the Charlottesville rally was Pax Dickinson, a technologist and leader of what’s been called “alt-tech,” a movement to develop alternative services tailor-made to support extremists, especially those kicked off mainstream platforms.

This alt-tech consists of alternatives to Twitter (Gab.ai, where Daily Stormer’s Andrew Anglin is active), Patreon (Hatreon, developed by Cody Wilson), and Kickstarter (GoyFundMe) among others. Both Hatreon and GoyFundMe, the latter founded by Matt Parrott of the Traditionalist Worker Party, have been designated as hate groups by the SPLC for their role in financing the hate movement.

The alt-right movement also adopted the cryptocurrency Bitcoin, which Spencer has called the “currency of the alt right.” The Bitcoin account for neo-Nazi hacker Andrew “Weev” Auernheimer, the man responsible for keeping The Daily Stormer on the internet, has received over $2 million worth of the cryptocurrency.

The movement has created these platforms out of necessity and it’s clearly a loss for them. The inability to access large audiences on mainstream platforms diminishes their ability to fundraise and recruit. This quarantine effect is a step forward.

There were two other developments in 2017 that might point to a way forward in effectively dealing with extremism online.

In Germany, a new law requiring social media companies to remove hateful and extremist content was passed in the summer. Companies will face fines of up to 50 million euros for failing to remove content within deadlines ranging from 24 hours to seven days. Facebook hired 500 new employees in an effort to comply.

Beginning January 1, 2018, when a transition period ended, Germany became a natural experiment in the efficacy of a government-enforced approach to curtailing online hate in a country where Holocaust denial, racist and antisemitic speech and incitement to hatred are all outlawed.

As Germany began to implement its law, international Twitter users discovered that changing their location settings to Germany dramatically decreased the amount of hateful content they encountered on the platform. If the German experiment succeeds, with a minimal impact on otherwise legal freedom of expression, it might pave the way for more European countries with hate speech laws to enact similar regulations. A new European framework along these lines might then force the biggest tech companies to apply similar approaches globally, including in the United States.

In October, researchers at Emory University, Georgia Institute of Technology and the University of Michigan published a study on Reddit, the eighth biggest website in the world. In 2015, Reddit banned a number of toxic “subreddits,” or forums, including the virulently racist /r/CoonTown.

After examining more than 100 million posts, the researchers concluded that the bans of two subreddits largely worked. When the forums were banned, the researchers found that the poisonous discussions didn’t migrate to other forums on the site, and that many users left Reddit altogether. For users who stayed on the site, researchers found that their use of hate speech decreased by 80 percent.

“One possible interpretation, given the evidence at hand, is that the ban drove the users from these banned subreddits to darker corners of the internet,” the authors wrote. “The empirical work in this paper suggests that when narrowly applied to small, specific groups, banning deviant hate groups can work to reduce and contain the behavior.”

A few weeks after the encouraging findings were published, Reddit banned /r/incel, another infamous forum known for its prolific misogyny — a critical ingredient behind the resurgent white nationalist movement.

While there’s no doubt that the challenge facing tech companies is vast and complicated, clearly more needs to be done. The post-Charlottesville moment has provided good examples of companies taking action where they’ve formerly been reluctant to do so, and they have been largely rewarded for it. They should go further. And unless they do, people like Richard Spencer will continue to see their influence grow despite advocating for ideas that so clearly conflict with the stated values of the platforms they exploit.